Kubernetes (K8s) lets us deploy applications as containers on a set of machines configured as worker nodes (formerly called minions) in a cluster. However, it doesn’t deploy the containers directly on the nodes; it deploys them inside Pods.

A Pod is not a process, but an environment for running container(s).

Containers are encapsulated in a k8s object called a ‘Pod’. K8s manages the Pod rather than the containers directly.

Table of Contents

- What is a Pod?

- Why Pods?

- Working with Pods

- how to deploy pods – cli

- how to deploy pods – using yaml configuration file

What is a Pod?

A Pod is the smallest unit of execution that can be created in k8s. In its simplest form, a Pod is a single instance of an application, running as a container (under Docker or a similar runtime engine).

The official k8s documentation describes it this way: ‘A Pod (as in a pod of whales or pea pod) is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers.’

Pods usually have a 1-to-1 relationship with application containers (although a Pod can hold more than one) and act as a wrapper that provides

– a unique IP address, so Pods can communicate with each other

– environmental dependencies

– persistent storage volumes

– configuration data needed to run the application instance.
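As a rough illustration of that wrapper, the sketch below writes a Pod manifest that passes configuration through an environment variable and mounts a volume. The file name, Pod name, variable and mount path are all hypothetical, and it uses an emptyDir volume only to stay self-contained; an emptyDir disappears with the Pod, so truly persistent data would need a PersistentVolumeClaim instead.

```shell
# Write a Pod manifest with an environment variable and a mounted volume.
# All names here (web-pod, APP_MODE, scratch, /data) are hypothetical.
cat > web-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-pod
spec:
  containers:
  - name: web
    image: nginx
    env:
    - name: APP_MODE        # configuration handed to the container
      value: "demo"
    volumeMounts:
    - name: scratch
      mountPath: /data      # where the volume appears inside the container
  volumes:
  - name: scratch
    emptyDir: {}            # lives only as long as the Pod; not persistent
EOF
```

With a cluster available, `kubectl apply -f web-pod.yaml` followed by `kubectl get pod web-pod -o wide` should show the Pod's IP address in the IP column.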

By design, Pods are ephemeral. If a Pod managed by a controller such as a Deployment fails (or the node it runs on fails), k8s automatically creates a replacement; a Pod created directly is not recreated. Otherwise, a Pod persists until it is deleted.
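Automatic replacement comes from a controller that owns the Pods. As a minimal sketch, assuming a Deployment is the controller in use, the manifest below asks for two nginx Pods; the file and object names are hypothetical.

```shell
# Write a Deployment manifest that keeps two replicas of an nginx Pod running.
# The names nginx-deployment and app=nginx are hypothetical.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2               # desired number of Pods
  selector:
    matchLabels:
      app: nginx            # must match the labels in the Pod template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
EOF
```

After `kubectl apply -f nginx-deployment.yaml`, deleting one of the Pods with `kubectl delete pod <pod-name>` should lead the Deployment to start a new one, which can be watched with `kubectl get pods -l app=nginx`.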

Why Pods?

The benefit of a Pod is that it encapsulates an application made up of multiple tightly coupled, cooperating processes (running as containers) that need to share resources. These co-located containers are represented as a single coherent unit of service, and managing this single unit is much easier than managing each component individually.

Pods provide much-needed modularity, whether for management, logging/reporting or replication.

- Typically, a Pod represents a single process running on the cluster. By limiting Pods to a single process, k8s can report on the metrics/health of each process

- Multiple containers inside a Pod have simplified communication & data sharing: they share the same network namespace, so they can reach each other via localhost

- Pods can communicate with one another using each other’s unique IP addresses or by referencing resources that reside in them

- Pods can include init containers, which run to completion before the application container starts (to provide initialization/configs), and sidecar containers, which run alongside the application container. K8s manages their relationship, start-up order and termination

- Pods simplify scaling: replicas of the whole Pod (app + init/sidecar containers) can be created or destroyed automatically based on load requirements
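To make the init/sidecar and localhost points more concrete, here is a hedged sketch of a Pod with one init container, one application container and one sidecar. The init container writes a page into a shared volume before nginx starts, and the sidecar fetches that page over localhost. The Pod, container and volume names are hypothetical, and busybox is just a convenient small image for the helpers.

```shell
# Write a Pod manifest with an init container, an app container and a sidecar.
# All names (web-with-helpers, init-content, probe-sidecar, content) are hypothetical.
cat > sidecar-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-with-helpers
spec:
  initContainers:
  - name: init-content      # runs to completion before the app starts
    image: busybox
    command: ["sh", "-c", "echo 'hello from init' > /work/index.html"]
    volumeMounts:
    - name: content
      mountPath: /work
  containers:
  - name: nginx             # application container, serves the shared volume
    image: nginx
    volumeMounts:
    - name: content
      mountPath: /usr/share/nginx/html
  - name: probe-sidecar     # shares the Pod network, so localhost reaches nginx
    image: busybox
    command: ["sh", "-c", "while true; do wget -qO- http://localhost:80; sleep 10; done"]
  volumes:
  - name: content
    emptyDir: {}            # scratch volume shared by all three containers
EOF
```

Once applied with `kubectl apply -f sidecar-pod.yaml`, the sidecar's output can be read with `kubectl logs web-with-helpers -c probe-sidecar`.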

Working with Pods

Whether Pods are created directly (by a user) or indirectly (by controllers like Deployment, StatefulSet, DaemonSet), each Pod is scheduled to run on a Node in the cluster. The Pod ‘lives’ on that node until

– pod finishes execution

– pod object is deleted

– pod is evicted due to a resource crunch, i.e. CPU, memory etc.

– node failure
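To see where a Pod currently lives and why it stopped, two small shell helpers can wrap the relevant kubectl queries. This is only a sketch: the helper names are hypothetical, and both assume kubectl is already configured against a cluster.

```shell
# Hypothetical helper: print a Pod's phase and the node it was scheduled on.
pod_where() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}{"\t"}{.spec.nodeName}{"\n"}'
}

# Hypothetical helper: list events recorded for a Pod (scheduling, evictions, kills).
pod_events() {
  kubectl get events --field-selector "involvedObject.name=$1"
}
```

For example, `pod_where nginx-pod` prints the phase and node name for a Pod called nginx-pod, and `pod_events nginx-pod` lists the events recorded for it.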

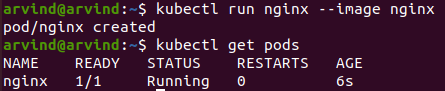

how to deploy Pods – cli

kubectl run nginx --image=nginx

kubectl get pods

The first command creates a Pod and runs a container in it from the nginx image, which is pulled from Docker Hub by default. The second command lists the Pods in the current namespace.
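A handy variation is to ask kubectl for the manifest it would create, without creating anything. The sketch below does that with a client-side dry run; the Pod name and output file are hypothetical, and the guard simply skips the step on a machine without kubectl.

```shell
POD_NAME=nginx-preview          # hypothetical Pod name
OUT_FILE=generated-pod.yaml     # hypothetical output file

if command -v kubectl >/dev/null 2>&1; then
  # --dry-run=client prints the object kubectl would send, without creating it
  kubectl run "$POD_NAME" --image=nginx --dry-run=client -o yaml > "$OUT_FILE"
  cat "$OUT_FILE"
else
  echo "kubectl not found; nothing generated"
fi
```

The generated file is a convenient starting point for a hand-edited Pod manifest.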

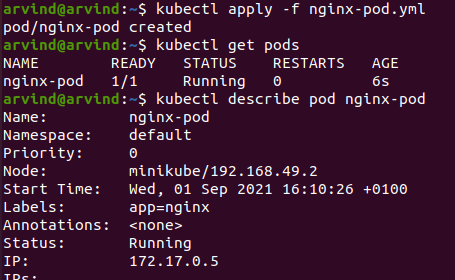

how to deploy Pods – using a configuration file

Kubernetes uses YAML files as input for creating various objects. Broadly speaking, the YAML has 4 top-level fields:

apiVersion – version of the Kubernetes API used to create the object

kind – what object needs to be created

metadata – data about the object, like name and labels

spec – the desired configuration of the object; its contents depend on the kind

Here I’m using a simple nginx-pod.yml to create a pod

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
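A typical way to use this file, sketched under the assumption that it is saved as nginx-pod.yml in the current directory and that kubectl points at a working cluster:

```shell
MANIFEST=nginx-pod.yml   # the file from this post, assumed to be in the current directory

if command -v kubectl >/dev/null 2>&1 && [ -f "$MANIFEST" ]; then
  kubectl apply -f "$MANIFEST"          # create the Pod described in the file
  kubectl get pod nginx-pod -o wide     # status, Pod IP and node
  kubectl describe pod nginx-pod        # events and container details
  # kubectl delete -f "$MANIFEST"       # uncomment to remove the Pod again
else
  echo "skipping: kubectl or $MANIFEST not available"
fi
```

Using `kubectl apply` rather than `kubectl create` means the same command can be re-run after editing the file.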

More details about the Pod lifecycle, types of Pods, status of Pod objects etc. in later posts.

till then Learn… Share… Grow…
